Principled Out-of-Distribution Detection via Multiple Testing

Updated: 2023-12-31 22:02:12

Akshayaa Magesh, Venugopal V. Veeravalli, Anirban Roy, Susmit Jha; 24(378):1-35, 2023.

Abstract: We study the problem of out-of-distribution (OOD) detection, that is, detecting whether a machine learning (ML) model's output can be trusted at inference time. While a number of tests for OOD detection have been proposed in prior work, a formal framework for studying this problem is lacking. We propose a definition for the notion of OOD that includes both the input distribution and the ML model, which provides insights for the construction

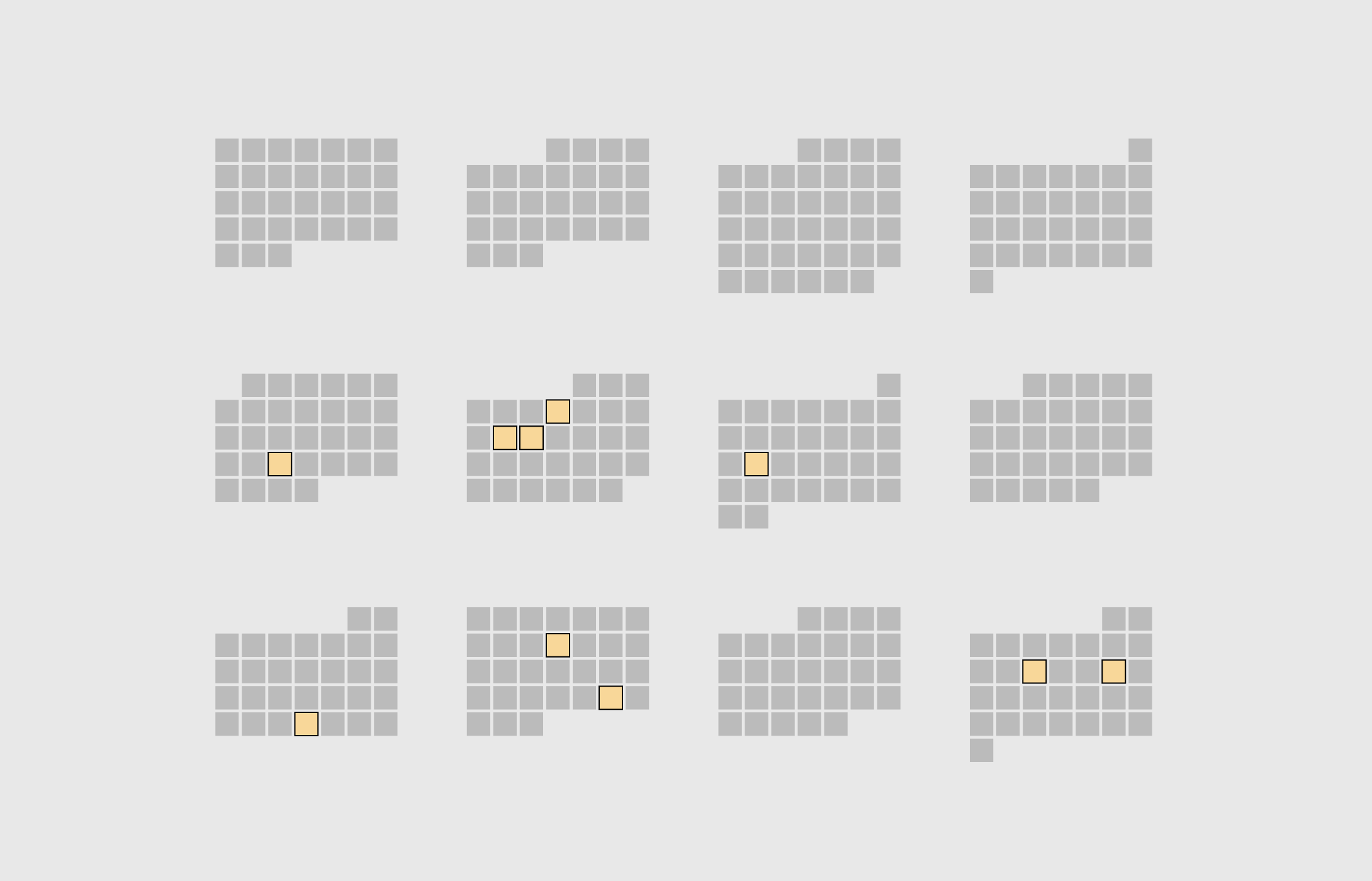

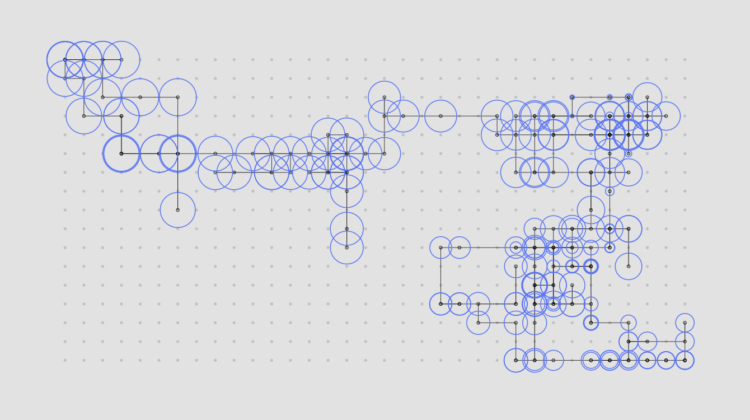

Best Data Visualization Projects of 2023

By Nathan Yau

Data continues on its upwards trajectory and with it comes the importance of visualization. Many charts were made in 2023. If I liked something, it was on FlowingData. These are my ten favorites from the year.

Alvin Chang, for The Pudding: 24 hours in an invisible epidemic. This was a standout for me. I mess with data from the American Time Use Survey pretty much every year and Alvin’s project still caught me off guard.

Lauren Leatherby, for The New York Times: How a Vast Demographic Shift Will Reshape the World. Population data. It’s another dataset we’ve seen many times, but I enjoyed the focus on age

Visualization Tools and Learning Resources, December 2023 Roundup

December 28, 2023. Members only. Topic: The Process / roundup

Welcome to The Process, the newsletter for FlowingData members where we look closer at how the charts get made. I’m Nathan Yau. Every month I collect useful tools and resources to help you make better charts. Here’s the last roundup of 2023.

To access this issue of The Process, you must be a member. The Process is a weekly newsletter on how visualization tools, rules, and guidelines work in practice. I publish every Thursday. Get it in your inbox or read it on FlowingData. You also gain unlimited access to hundreds of hours

Fun With Data

December 21, 2023. Members only. Topic: The Process / fun

Welcome to The Process, the newsletter for FlowingData members that looks closer at how the charts get made. I’m Nathan Yau. With a few days left until Christmas, I suspect you have other things to do, but here are some fun things to do with data in case you’re looking for a distraction to pull you towards the weekend.

To access this issue of The Process, you must be a member.

It’s a different kind of podcast this week: Simon and Alberto talk about Alberto’s latest book, The Art of Insight, why data journalism is still a dream job and our approaches to working with numbers to tell stories. Find out what books got us here – and what we care about most, when it comes …
